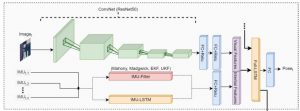

Deep learning (DL) based localization and Simultaneous Localization and Mapping (SLAM) have recently gained considerable attention and demonstrated remarkable results. Instead of relying on hand-crafted algorithms derived from geometric theory, DL-based methods offer a data-driven solution to the problem. By taking advantage of large amounts of training data and computing capacity, these approaches are developing into a new field that offers accurate and robust localization systems. In this work, the problem of global localization for unmanned aerial vehicles (UAVs) is addressed by proposing a sequential, end-to-end, multimodal deep neural network framework for monocular visual-inertial localization. More specifically, the proposed architecture has three components: a convolutional network (ConvNet) that extracts visual features, a small bi-directional long short-term memory (LSTM) network that integrates inertial measurement unit (IMU) data, and a bi-directional LSTM network that regresses the global pose. In addition, a more time-efficient deep pose estimation framework is presented in which a traditional IMU filtering method, rather than the IMU LSTM, is fused with the ConvNet. The focus of this study is to evaluate the precision and efficiency of visual-inertial (VI) localization approaches in indoor scenarios. The proposed deep global localization method is compared with various state-of-the-art algorithms on indoor UAV datasets, in simulation environments, and in real-world drone experiments in terms of accuracy and time efficiency. A detailed comparison of the IMU-LSTM and IMU-Filter based pose estimators is also provided. Experimental results show that the proposed filter-based approach combined with DL achieves promising accuracy and time efficiency for indoor localization of UAVs.
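To give a feel for what a traditional IMU filter contributes in place of an LSTM, the following is a minimal sketch of a complementary filter that estimates roll and pitch from gyroscope and accelerometer samples. The abstract does not name the filter used in the paper, so the choice of a complementary filter, the function name `complementary_filter`, and the blending weight `alpha` are all assumptions made for illustration only.

```python
import math


def complementary_filter(gyro_rates, accel_samples, dt, alpha=0.98):
    """Estimate (roll, pitch) in radians for each IMU sample.

    Hypothetical illustration, not the filter from the paper.
    gyro_rates:    iterable of (gx, gy, gz) angular rates in rad/s
    accel_samples: iterable of (ax, ay, az) accelerations in m/s^2
    dt:            sample period in seconds
    alpha:         weight given to the integrated gyro estimate (assumed value)
    """
    roll, pitch = 0.0, 0.0
    estimates = []
    for (gx, gy, _gz), (ax, ay, az) in zip(gyro_rates, accel_samples):
        # Tilt implied by the gravity direction seen by the accelerometer.
        accel_roll = math.atan2(ay, az)
        accel_pitch = math.atan2(-ax, math.hypot(ay, az))
        # Blend the gyro-propagated angle (smooth, but drifts) with the
        # accelerometer angle (noisy, but drift-free). Body rates are treated
        # as Euler-angle rates, which only holds for small tilt angles.
        roll = alpha * (roll + gx * dt) + (1.0 - alpha) * accel_roll
        pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * accel_pitch
        estimates.append((roll, pitch))
    return estimates
```

A filter of this kind needs only a few arithmetic operations per sample and no training, which is consistent with the time-efficiency motivation stated above. In a pipeline like the one described, its output would be combined with the ConvNet features before pose regression; how the paper performs that fusion is not specified here.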

Prepared by Abdullah Yusefi, Akif Durdu, M. Fatih Aslan and Cemil Sungur

Link: https://doi.org/10.1109/ACCESS.2021.3049896

Video Link: https://youtu.be/CJeX0lFX1do